Strategies for decreasing the number of queries in a Laravel app

Oh Dear is all-in-one solution to monitor your site that my buddy Mattias Geniar and I have created. It can monitoring uptime, certificates, broken links, scheduled jobs, and much more.

Under the hood, Oh Dear is a large Laravel application that performs many queries all of the time. To power future features, we've recently changed our database structure and refactored some pieces in our code base. We increased performance by decreasing the number of queries.

In this blog post, we'd like to to share some techniques that might be helpfull to increase the performance of your Laravel app too.

Our MySQL server does a lot of work

Since we've launched our service a couple of years ago, we've steadily seen our service grow at a nice pace. In our early days, we only monitored a handful of websites. This didn't result in any significant load on the server we were using at the time.

Right now, we are monitoring tens of thousands of sites. For each of those sites we perform multiple checks: uptime, certificate health, broken links, mixed content, scheduled jobs... Before our optimisation improvements, at peaks we performed about 4 000 queries per second for all of these sites and checks.

When coding up Oh Dear initially, we weren't really focused on performance. We didn't think our service would become as big as it is now. We were not really worried about the amount of queries. So, when we needed a piece of data in a check, we just performed a query. This works when you only have a couple of sites to monitor, but at the current amount of sites we monitor, this was becoming problematic.

We have a beefy server that can manage our current load. We were worried that performance problems would hit us hard with our current growth at some point in the near future. So the past few months, we've made changes to decrease the amount of queries.

Decreasing the query count when dispatching new runs

For each type of check, we have an Artisan command that will dispatch a run to perform that check. The command loops over each team. For each of the sites of team we'll get the Check model that represents a check and has all the customisation options for a check. Here's the simplified code.

class DispatchNewUptimeRunsCommand extends Command

{

protected $signature = 'ohdear:dispatch-new-runs:uptime';

public function handle()

{

$this->info('Dispatching new uptime check runs ...');

Team::with('sites', 'sites.checks')->each(function (Team $team) {

if (! $team->hasActiveSubscriptionOrIsOnGenericTrial()) {

return;

}

$team

->sites

->each(function (Site $site) use ($team) {

$uptimeCheck = $site->getCheck(CheckType::UPTIME());

// dispatch a run that will perform the actual check

Before dispatching a run of a check, we first verify if a team is subscribed using that hasActiveSubscriptionOrIsOnGenericTrial function. It is executed a lot of times: for each team in our database multiplied by the amount of checks we have. Let's take a look it's implementation.

public function hasActiveSubscriptionOrIsOnGenericTrial(): bool

{

if ($this->onGenericTrial()) {

return true;

}

return $this->hasActiveSubscription();

}

It should be easy to grasp what is going on in the snippet above. In the onGenericTrail and hasActiveSubscription functions, multiple queries are being performed.

We can greatly decrease the amount of queries by caching the result of this function.

public function hasActiveSubscriptionOrIsOnGenericTrial(): bool

{

$key = "hasActiveSubscriptionOrIsOnGenericTrial-team-{$this->id}";

return cache()->remember($key, 3600, function () {

if ($this->onGenericTrial()) {

return true;

}

return $this->hasActiveSubscription();

});

}

For each team, we are now going to determine the subscription status only once on hour. This change will lead to tens of thousands less queries in an hour.

The only downside that this takes is that we potentially keep on running checks for teams without a subscriptions a couple of minutes longer than needed. But the decrease in queries is worth that downside.

Decreasing the amount of queries performed in a run

Before our performance improvements, to run a check we simply created a Run model with a status of pending. Here's the simplified code for the uptime check.

// in DispatchNewUptimeRunsCommand

$check = $site->getCheckOfType(CheckType::UPTIME());

// create a run model

$run = Run::createForCheck($check);

dispatch(new PerformUptimeCheckJob($run));

In the PerformUptimeCheckJob the actual check is being performed. Inside of that job we performed multiple queries to retrieved the check, site, notification preferences, ... The job would also update the run to save the results of the check.

This way of running a check isn't only used for the uptime check, but for all checks Oh Dear performs. Inside the PerformUptimeCheckJob (and other type of jobs) only a few queries were executed. As Oh Dear executes tens of thousands of runs per minute, those few queries in a single run lead to a lot of queries in total.

To minimise the amount of queries performed inside a job, we converted our code to make use of what we call, an InMemoryRun class. That class is basically a DTO that has all the necessary information to perform a run of a check.

class InMemoryUptimeRun extends InMemoryRun

{

public function __construct(

public int $checkId,

public string $uptimeCheckLocation,

public string $checkUrl,

// other pieces of info needed by a run

}

Here's the simplified version of the refactored DispatchNewUptimeRunsCommand where the InMemoryUptimeRun is used.

// in DispatchNewUptimeRunsCommand

$check = $site->getCheckOfType(CheckType::UPTIME());

// make an in memory run DTO

$inMemoryRun = $check->makeInMemoryRunForCheck($check);

dispatch(new PerformUptimeCheckJob($inMemoryRun));

Because that PerformUptimeCheckJob now gets an object that contains all the bits of info it needs to perform a check,PerformUptimeCheckJob doesn't need to execute queries itself anymore. The total number of queries performed in jobs drastically decreased.

Also notice that in the previous we were creating a Run model for each performed in DispatchNewUptimeRunsCommand. In the refactored code we don't store Run with a state of pending anymore. In the PerformUptimeCheckJob we simply create a Run model with the results. Avoiding creating a Run with a pending status in DispatchNewUptimeRunsCommand results again in tens of thousands less insert queries.

Prevent queries by optimizing circular relationships

After reading the section above, you might think that we've simply moved executing queries to get the Team, Site models, ... from inside the run job, to the command that schedules those jobs.

In our command, we were already getting Team and Site as we are looping over all teams and sites. The Check model has a method makeInMemoryRunForCheck that creates that InMemoryRun discussed in the previous section. Under the hood, it will use the relation to a site and team to retrieve the needed bits of info on those models.

To avoid Check from performing a query to load its relations, we can reuse the instances of teams and sites we got when looping over all teams and sites. These instances can be set using Laravel's setRelation method.

class DispatchNewUptimeRunsCommand extends Command

{

protected $signature = 'ohdear:dispatch-new-runs:uptime';

public function handle()

{

$this->info('Dispatching new uptime check runs ...');

Team::with('sites', 'sites.checks')->each(function (Team $team) {

if (! $team->hasActiveSubscriptionOrIsOnGenericTrial()) {

return;

}

$team

->sites

->each(function (Site $site) use ($team) {

$check = $site->getCheck(CheckType::UPTIME());

// prevent performing a query when using the relations

$check

->setRelation('site', $site)

->setRelation('site.team', $team);

// make an in memory run DTO

$inMemoryRun = $check->makeInMemoryRunForCheck($check);

dispatch(new PerformUptimeCheckJob($inMemoryRun));

If you want to know more about setRelation and circular references, check out this excellent blog post by Jonathan Reinink.

Using DynamoDB to increase performance

Oh Dear can monitor scheduled tasks as well. This works by letting our users define their tasks in our UI (or via our schedule monitor package) and letting scheduled tasks pinging our monitoring endpoint when they end. When a ping doesn't come in on time, we send a notification to our user.

This way of monitoring results of a lot of HTTP requests being sent to our monitoring endpoint in a very short timespan. To handle this load we had decided to host that endpoint on AWS Lambda (via Laravel Vapor).

We require each request to use a specific UUID in the called URL to filter out any unwanted requests. In a previous version of our code we kept all valid UUIDs in the MySQL DB and we made a query whenever a ping request comes in.

Here is the route binding of cronCheckUuid that was used in the routes that handle incoming ping request.

protected function registerRouteModelBindings(): self

{

Route::bind('cronCheckUuid', function (string $cronCheckUuid) {

if (! $cronCheckDefinition = CronCheckDefinition::findByUuid($cronCheckUuid)) {

abort(Response::HTTP_UNAUTHORIZED, 'not authorized');

}

return $cronCheckDefinition;

});

return $this;

}

Under normal circumstances, this worked just fine. But our endpoints that handle incoming pings receive so many requests that the db sometimes isn't fast enough, resulting in failed requests.

A MySQL database can often be the bottleneck in a serverless application. In most cases, it's a good idea to avoid using a MySQL database on high traffic endpoints. In our case, we avoid making a query by leveraging DynamoDB, a high performance key-value storage that's optimised for read operations. Laravel Vapor uses DynamoDB as its default store for caching.

To leverage DynamoDB in our Laravel app on Vapor, we first created a new cache store.

// in the `stores` key of `config/cache.php`

'cron-check' => [

'driver' => 'dynamodb',

'key' => env('AWS_ACCESS_KEY_ID'),

'secret' => env('AWS_SECRET_ACCESS_KEY'),

'region' => env('AWS_DEFAULT_REGION'),

'table' => 'cron-check',

'endpoint' => env('DYNAMODB_ENDPOINT'),

],

Next, we created a value object CachedCronCheckDefinition which has a save method that will write its values to DynamoDB.

// in the save method of `CachedCronCheckDefinition`

public function save(): self

{

Cache::store('cron-check')->set("cron-check-definition-{$this->uuid}", json_encode($this->allProperties));

return $this;

}

We still want to use MySQL as our source of truth, so we can easily make modifications using Eloquent. We sync a CronCheckDefinition in MySQL with a CachedCronCheckedDefinition in DynamoDB using a couple of model lifecycle hooks.

// in the `CronCheckDefinition` model

public static function booted()

{

static::saving(function (CronCheckDefinition $cronCheckDefinition) {

try {

(new CachedCronCheckDefinition(

$this->attributesToCache()

))->save();

} catch (Exception $exception) {

report($exception);

}

});

static::deleting(function (CronCheckDefinition $cronCheckDefinition) {

try {

CachedCronCheckDefinition::find($cronCheckDefinition->uuid)?->delete();

} catch (Exception $exception) {

report($exception);

}

});

}

Finally in our RouteServiceProvider, we update the binding to make use of the CachedCronCheckDefinition.

Route::bind('cronCheckUuid', function (string $cronCheckUuid) {

if (! $cronCheckDefinition = CachedCronCheckDefinition::find($cronCheckUuid)) {

abort(Response::HTTP_UNAUTHORIZED, 'not authorized, are you sure the UUID is correct?');

}

return $cronCheckDefinition;

});

With this set up, the controller the handles incoming pings will have the CachedCronCheckDefinition injected, and no single query to MySQL gets made.

We're aware that CachedCronCheckDefinition and the way it is set up is a bit verbose, and we could easily hide the inner workings a bit. As avoiding a MySQL query is so important, we think that it's good to let the naming of things hint that the cache is being used.

Avoiding making a query improved the performance of our app drastically. In this tweet you'll find some screenshots from our Grafana installation. I've omitted the actual numbers in these screenshots, but you can easily see the trend.

Made some changes at the Lamda endpoint that receives the ping from all scheduled commands at @OhDearApp

— Freek Van der Herten 🔭 (@freekmurze) September 6, 2021

Refactored so that MySQL isn’t used, instead we leverage DynamoDB now.

Result: much faster endpoint, less concurrency needed. Here are some graphs from Grafana. pic.twitter.com/KPdRKrzPry

If you want to learn how to use Grafana on AWS, check out this blogpost by Michael Dyrynda who also helped us setting all of this up.

In our test suite we have multiple tests to make sure the HTTP endpoints that receive the pings are working correctly. In those test we use a function assertNoQueriesExecuted that makes sure that no single query is being executed. The implementation of that assertion is pretty simple.

protected function assertNoQueriesExecuted(): self

{

$this->assertCount(0, DB::getQueryLog());

return $this;

}

You can see that this assertion makes use of Laravel's query log. In order for this assertion to work, you must enable the query log. We did that by adding DB::enableQueryLog to the setUp method of our base test case.

Just in time upgrades

Besides making performance optimisations, we made some other changes that will make it easier for us to build new features. One of those changes is that we made a change in how we store results on the runs table. That table has a column results where the results of a particular run are saved. We wanted to change the format in how the results are stored in that column.

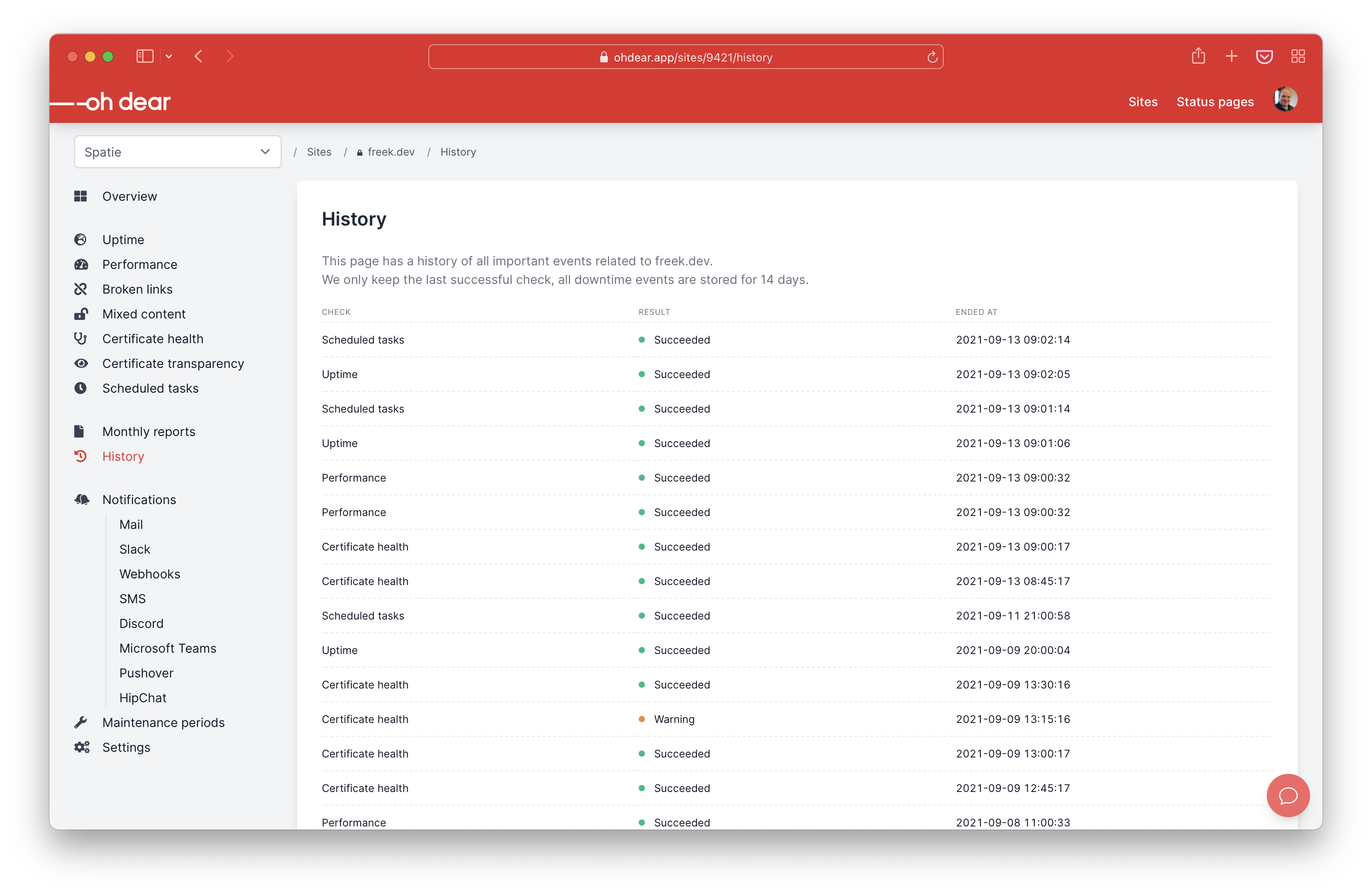

The runs table is probably the biggest one in Oh Dear. For each check we perform (and we perform tens of thousands of checks every minute), a record is inserted. We use this information on past runs to determine if we should send a notification (we only do that after a few failed runs). These runs are also used on the history screen where our users can check the history of all checks we perform.

The runs table contains millions of rows. Because we perform checks all the time, it is very much a "living" table, where new data is inserted and cleaned up continously.

Having to update the results field from the old value to the new value for all records would take a lot of time. That's why we opted for another strategy: something that we call a "just in time upgrade".

Whenever we call getResults on a Run model and detect that it is still in the old format, we quickly convert it to the new format.

public function getResults(): CheckResults

{

if ($this->resultsAreStoredInOldFormat()) {

$this->updateResultsToNewFormat();

}

return new CheckResults($this->results)

}

We can get away with a "just in time" upgrade because results column isn't used in queries, but only on that history and detail of a run screens.

In my mind this isn't really a mind-blowing technique, but I hope this example of how we handled things can help you think out of the box: you don't always need to convert everything in the database to a new format.

Closing thoughts

With all these changes, we have drastically improved the performance of our entire app. Adding a bit of cache at the right places, and preloaded bits of info, got us a long way. We're now confident that our app will be able to handle our continued growth and new features we'll be adding soon.

Now is the perfect time to try out Oh Dear. We can send you a notification whenever your app is offline, your SSL certificate will expire or is expired, whenever a broken link appears on your site, and much more. You can also create awesome looking public status pages, like this one from Flare. We aim to be the all-in-one service for monitoring an app.

We hope you've enjoyed reading this post and learned something new to improve the performance of your app.