Skipping tests conditionally in Pest

In this blog post, I'd like to show you the easiest way to skip tests conditionally in Pest. The technique is simple, and it can be used for more than skipping tests: it makes your tests much more flexible.

Exploring our use case

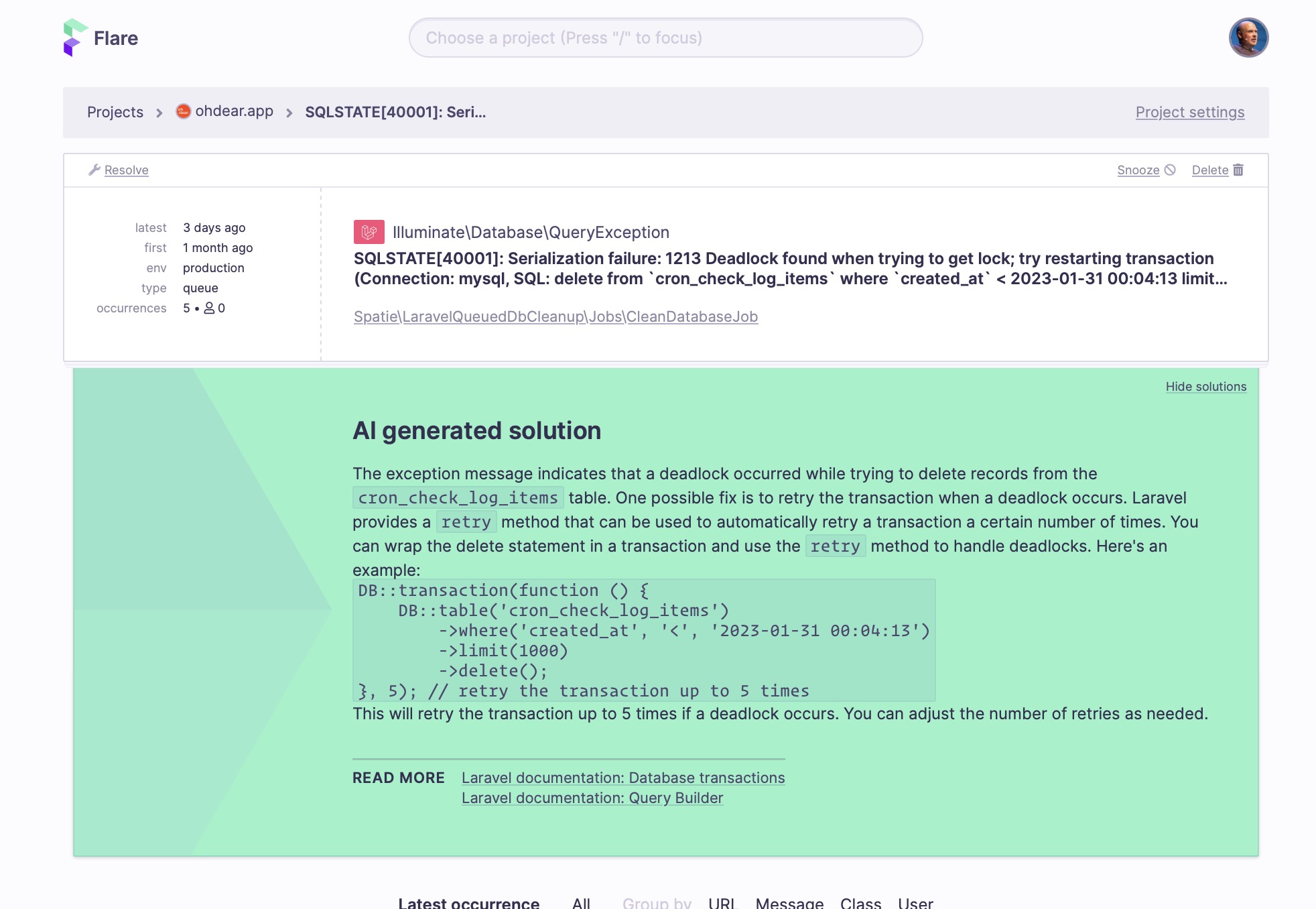

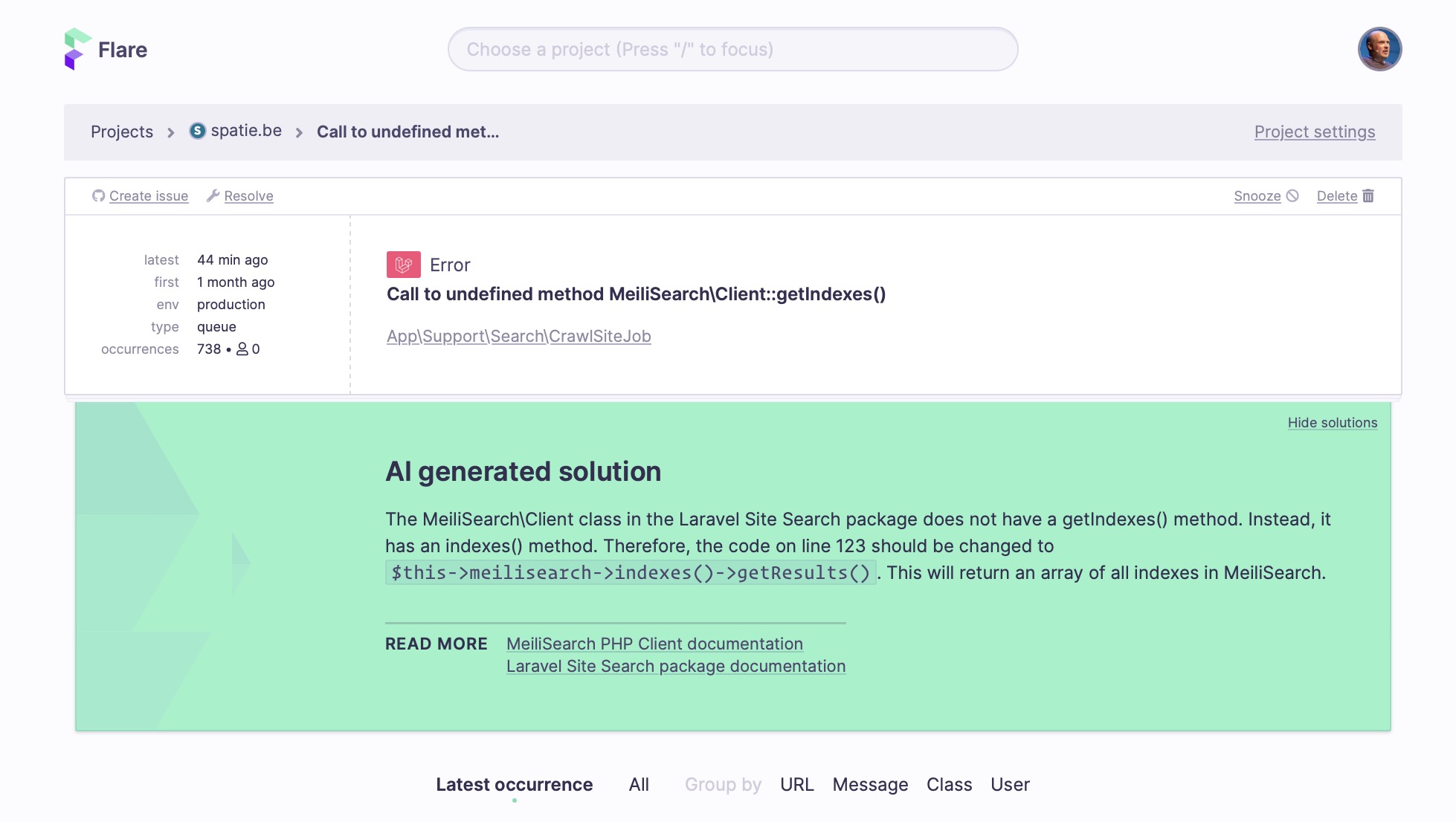

I'm currently working on adding AI solutions to Flare. When this feature is complete, Flare users will get AI-powered solutions out of the box with their Flare subscription. Here are a couple of examples.

Here, the AI suggested adding some code to retry a call when a deadlock occurs.

In this example, the AI detects that we make a wrong method call, and it suggest the right call from the package. It also includes a useful links to the docs.

Please note that this layout isn't final yet. When this feature is finished, we'll add a warning that an AI solution might not be 100% correct. Nevertheless, the results that we're seeing now are impressive.

Adding tests

For an integration with an external service, in this case OpenAI, I tend to write two types of tests.

The first type of test ensures that the code surrounding the call to the external service works. In these tests, we don't call the external system's actual API; we use a fake instead. Things we test this way include:

- do we call the external service at the right time

- do we send the correct parameters to the external service

- do we process the response of the external service correctly

As mentioned above, for these tests, we use a fake. The actual service isn't called.
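To make the first type of test more concrete, here's a minimal, framework-free sketch of the fake approach. All names in it (AiClient, FakeAiClient, SolutionSuggester) are hypothetical and not taken from Flare's code. The fake records every question it receives and returns a canned answer, so we can check whether the service was called, with which parameters, and whether the response was processed, without touching the network.

```php
<?php

// Hypothetical contract for anything that can answer a prompt.
interface AiClient
{
    public function ask(string $question): string;
}

// Hypothetical fake: never hits the network, records what it was asked,
// and returns a canned answer.
final class FakeAiClient implements AiClient
{
    /** @var list<string> */
    public array $questions = [];

    public function __construct(private string $cannedAnswer)
    {
    }

    public function ask(string $question): string
    {
        $this->questions[] = $question;

        return $this->cannedAnswer;
    }
}

// Hypothetical code under test: builds the prompt and post-processes the answer.
final class SolutionSuggester
{
    public function __construct(private AiClient $client)
    {
    }

    public function suggestFor(string $exceptionMessage): string
    {
        $answer = $this->client->ask("Suggest a fix for: {$exceptionMessage}");

        // "Processing the response" here is just trimming whitespace.
        return trim($answer);
    }
}

$fake = new FakeAiClient('  Retry the query when a deadlock occurs.  ');
$suggester = new SolutionSuggester($fake);

$solution = $suggester->suggestFor('Deadlock found when trying to get lock');

// $fake->questions now tells us if (and with what) the service was called.
echo $solution, PHP_EOL;
```

In a real test suite, the fake would typically be swapped into the container in place of the real client; how that's wired up depends on your application.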

In the second type of test, we do call the external service. In these tests, we want to ensure that the code that performs the API call to the external service works correctly.

Here is the test that makes sure we call OpenAI correctly. If OpenAI can't answer the simple question "How much is 5 + 5?" with 10, we'll assume we did something wrong.

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
});
```

In the test above, we make a real API call to OpenAI using the API key defined in config('services.open_ai.api_key'). That config value is filled from a variable in the .env file.
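For reference, a Laravel config entry of that shape usually looks like the excerpt below. The variable name OPEN_AI_API_KEY is an assumption; the post doesn't say which name is used. Because Laravel's env() helper returns null when the variable is missing, config('services.open_ai.api_key') is null on any machine that doesn't define it.

```php
<?php

// config/services.php (excerpt; the layout is assumed, not taken from the post)
return [
    'open_ai' => [
        // Hypothetical variable name. In .env: OPEN_AI_API_KEY=your-key-here
        // When the variable isn't defined, env() returns null.
        'api_key' => env('OPEN_AI_API_KEY'),
    ],
];
```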

When I push this test and a colleague pulls it in and runs it, the test fails on my colleague's machine. Why does it fail, you ask? Well, .env isn't in version control, so services.open_ai.api_key isn't set there.

Instead of failing, it's better to skip the test with a clear message explaining that it was skipped because the necessary API key is not set.

Here's a first attempt at that.

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
})->skip(config('services.open_ai.api_key') === null);
```

If you try to run this test, it will fail with this error message:

```
ERROR Target class [config] does not exist.
```

That might not be what you were expecting. The error occurs because PHP evaluates the expression passed to skip as soon as the test file is loaded, before Laravel has booted. The config function reaches into the IoC container, which hasn't been built yet at that point.

We simply need to wrap the expression in a closure. That delays its evaluation until a moment when Laravel has booted.
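Here's a small, framework-free sketch of why the closure matters. FakeApp and FakeTestCall are hypothetical stand-ins, not Laravel's or Pest's real classes: FakeApp::config() throws until boot() has run (mimicking config() before Laravel boots), and FakeTestCall::skip() simply stores whatever it receives. Passing a bool forces PHP to evaluate the expression immediately; passing a closure defers it.

```php
<?php

// Hypothetical stand-in for Laravel: config() only works after boot().
final class FakeApp
{
    private static ?array $config = null;

    public static function boot(array $config): void
    {
        self::$config = $config;
    }

    public static function config(string $key): mixed
    {
        if (self::$config === null) {
            throw new RuntimeException('Target class [config] does not exist.');
        }

        // Flat dotted keys, to keep the sketch short.
        return self::$config[$key] ?? null;
    }
}

// Hypothetical, simplified test call: skip() accepts an already-evaluated
// bool or a Closure that is only invoked when shouldSkip() is called.
final class FakeTestCall
{
    private Closure|bool $condition = false;

    public function skip(Closure|bool $condition): self
    {
        $this->condition = $condition;

        return $this;
    }

    public function shouldSkip(): bool
    {
        return $this->condition instanceof Closure
            ? (bool) ($this->condition)()
            : $this->condition;
    }
}

// Eager: the argument is evaluated before skip() even runs, so it throws.
$eagerFailed = false;
try {
    (new FakeTestCall())->skip(FakeApp::config('services.open_ai.api_key') === null);
} catch (RuntimeException $e) {
    $eagerFailed = true;
}

// Lazy: the closure is stored now and only invoked after boot().
$lazy = (new FakeTestCall())->skip(fn () => FakeApp::config('services.open_ai.api_key') === null);
FakeApp::boot(['services.open_ai.api_key' => null]);

var_dump($eagerFailed);        // bool(true)
var_dump($lazy->shouldSkip()); // bool(true)
```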

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
})->skip(fn () => config('services.open_ai.api_key') === null);
```

With this change, the test will pass, but only on a machine where config('services.open_ai.api_key') is set.

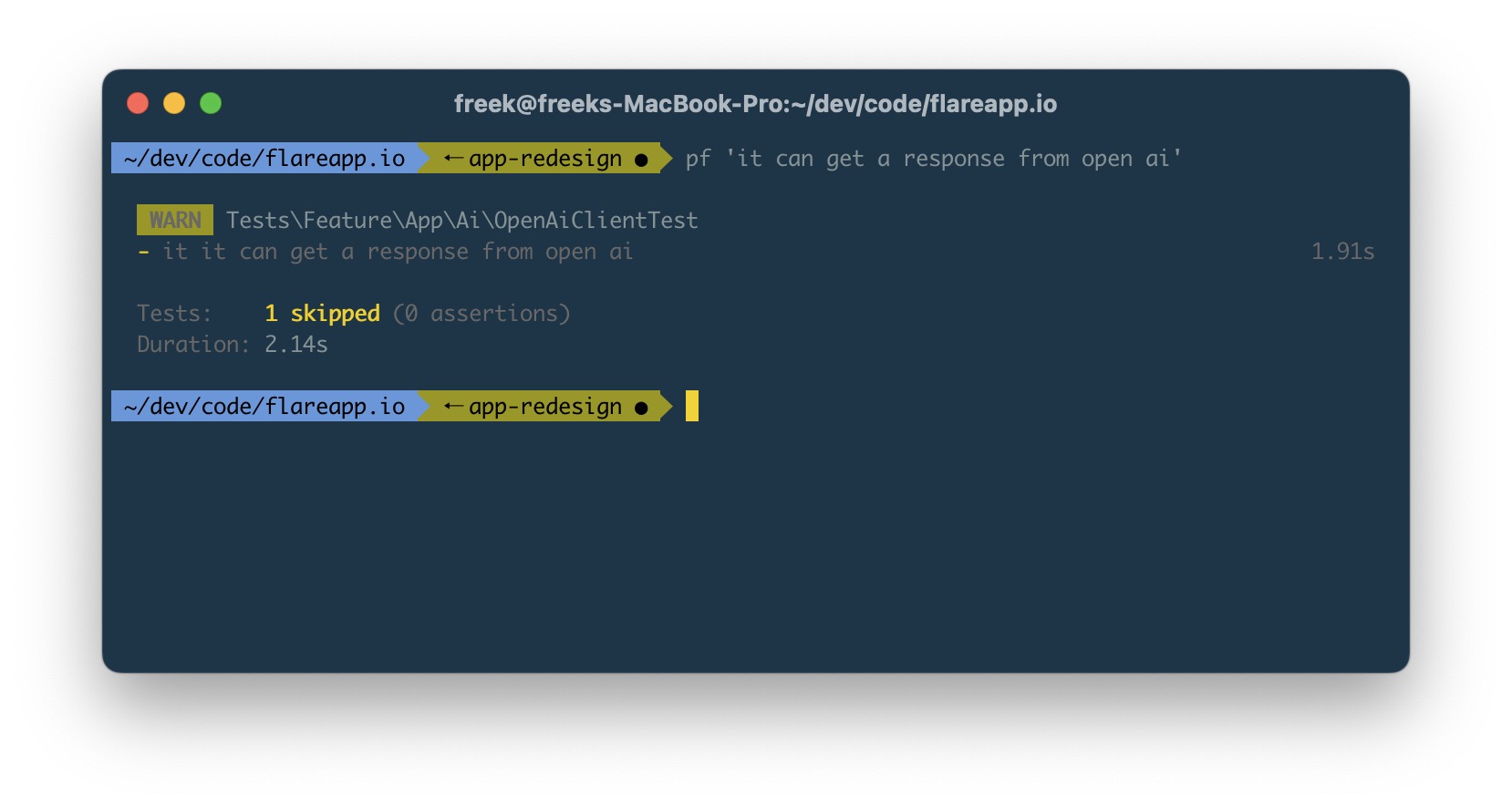

Let's now see what happens running the tests on a machine where config('services.open_ai.api_key') is not set.

The output states that the test was skipped, which is good, but it doesn't specify why. Luckily, you can pass the reason as a second argument.

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
})->skip(
    fn () => config('services.open_ai.api_key') === null,
    'Skipping test because config key `services.open_ai.api_key` is not set'
);
```

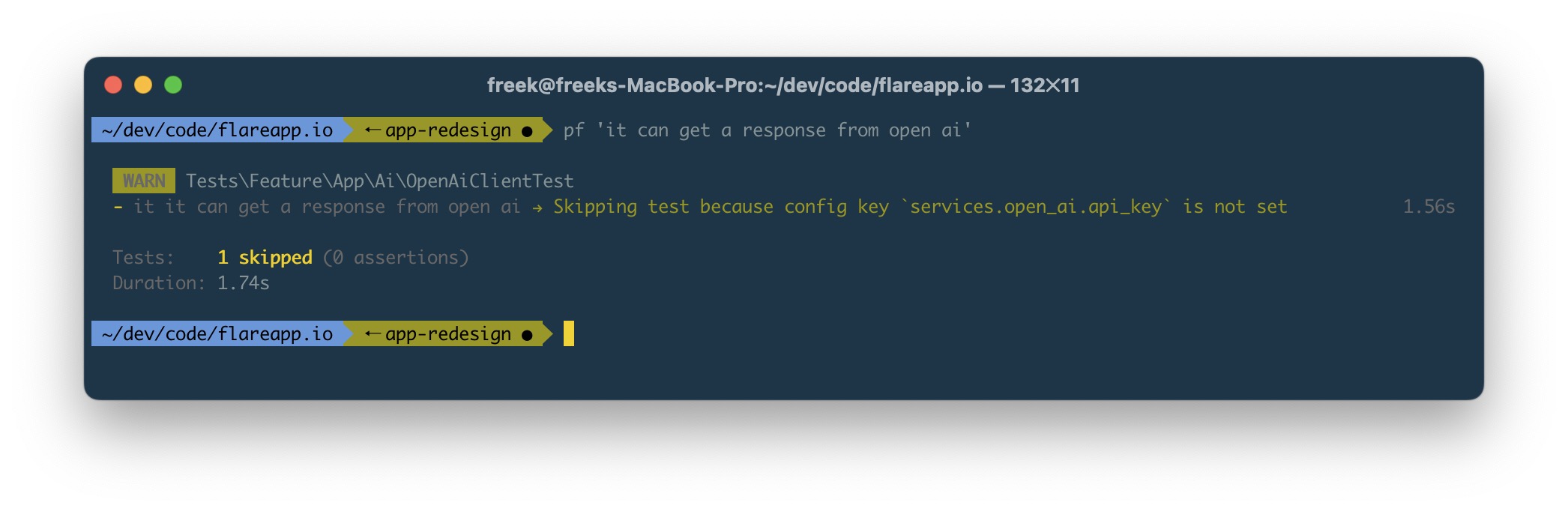

When running the test now, the reason why the test is skipped is displayed.

That's nice! But I don't like how bulky the arguments passed to skip are. Imagine you want to skip other tests when other config values aren't set: repeating this code every time gets verbose.

Pest to the rescue

Fortunately, Pest has got our back. A lesser-known feature is that you can tack any function you want onto a test, so you're not limited to built-in methods like skip() or todo().

The above test could be refactored to this one, where we use a function called skipIfConfigKeyNotSet.

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
})->skipIfConfigKeyNotSet('services.open_ai.api_key');
```

Of course, we still need to define skipIfConfigKeyNotSet itself. You could define the function anywhere, but a typical place is the Pest.php file. Inside that function, you can use test() to call anything you'd call in a regular test.

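```php
function skipIfConfigKeyNotSet(string $key): void
{
    // Loose comparison on purpose: null and an empty string both count as "not set"
    if (config($key) == null) {
        test()->markTestSkipped("Config key {$key} not set");
    }
}
```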

With this in place, we now have a convenient way to skip a test when a config value is not set: we can tack skipIfConfigKeyNotSet onto any test that needs it.
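To make the "tack on any function" idea more concrete, here's a heavily simplified, framework-free model of the pattern. It is not how Pest is implemented internally; MiniTestCall, MiniTestCase, TestSkipped, currentTest() and configValue() are all hypothetical stand-ins. Unknown method calls on MiniTestCall are recorded through __call, resolved to global functions of the same name right before the test body runs, and a skip is signalled by throwing an exception that carries the reason.

```php
<?php

// Hypothetical "this test was skipped" signal.
final class TestSkipped extends Exception
{
}

// Hypothetical, heavily simplified test case.
final class MiniTestCase
{
    public static ?MiniTestCase $current = null;

    public function markTestSkipped(string $reason): void
    {
        throw new TestSkipped($reason);
    }
}

// Plays the role of Pest's test() helper: returns the running test.
function currentTest(): MiniTestCase
{
    return MiniTestCase::$current;
}

// Hypothetical config store; null means "not set".
function configValue(string $key): mixed
{
    $config = ['services.open_ai.api_key' => null];

    return $config[$key] ?? null;
}

// The helper from above, rewritten against the stand-ins.
function skipIfConfigKeyNotSet(string $key): void
{
    if (configValue($key) == null) {
        currentTest()->markTestSkipped("Config key {$key} not set");
    }
}

// Hypothetical test call: unknown chained methods are recorded,
// then invoked as global functions right before the body runs.
final class MiniTestCall
{
    /** @var list<array{string, array<int, mixed>}> */
    private array $chain = [];

    public function __construct(private Closure $body)
    {
    }

    public function __call(string $name, array $arguments): self
    {
        $this->chain[] = [$name, $arguments];

        return $this;
    }

    public function run(): string
    {
        MiniTestCase::$current = new MiniTestCase();

        try {
            foreach ($this->chain as [$name, $arguments]) {
                $name(...$arguments); // call the global function by name
            }
            ($this->body)();

            return 'passed';
        } catch (TestSkipped $skipped) {
            return 'skipped: ' . $skipped->getMessage();
        }
    }
}

$result = (new MiniTestCall(fn () => null))
    ->skipIfConfigKeyNotSet('services.open_ai.api_key')
    ->run();

echo $result, PHP_EOL; // skipped: Config key services.open_ai.api_key not set
```

The design point the sketch tries to capture is that the chained call is only recorded when the test is defined; the function itself runs later, inside the test, which is why it can use the test case (and, in a Laravel app, the booted framework).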

Using our package

You can go one step further. At Spatie, we've created a package called spatie/pest-expectations containing custom expectations and test helper functions used across our projects. By packaging these up, we don't have to define these functions in all projects individually.

With the package installed, you can use the whenConfig function (the equivalent of skipIfConfigKeyNotSet above).

Here's how you can use it.

```php
it('it can get a response from open ai', function () {
    $response = app(OpenAiClient::class)
        ->useApiKey(config('services.open_ai.api_key'))
        ->ask('How much is 5 + 5?');

    expect($response)->toContain('10');
})->whenConfig('services.open_ai.api_key');
```

The package also offers these similar functions that can be tacked on to any test:

- whenEnvVar($envVarName): only run the test when the given environment variable is set

- whenWindows(): the test will be skipped unless running on Windows

- whenMac(): the test will be skipped unless running on macOS

- whenLinux(): the test will be skipped unless running on Linux

- whenGitHubActions(): the test will be skipped unless running on GitHub Actions

- skipOnGitHubActions(): the test will be skipped when running on GitHub Actions

- whenPhpVersion($version): the test will be skipped unless running on the given PHP version or higher. You can pass a version like 8 or 8.1.
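If you're curious how conditions like these could be checked, here's a sketch of plain PHP helpers covering the same cases. These are hypothetical helpers, not the actual implementation in spatie/pest-expectations. They rely only on PHP's built-in PHP_OS_FAMILY and PHP_VERSION constants, getenv(), version_compare(), and the GITHUB_ACTIONS environment variable that GitHub Actions sets to "true".

```php
<?php

// Hypothetical helpers sketching the conditions listed above.
// NOT the actual implementation in spatie/pest-expectations.

// PHP_OS_FAMILY is one of 'Windows', 'BSD', 'Darwin', 'Solaris', 'Linux' or 'Unknown'.
function isWindows(): bool
{
    return PHP_OS_FAMILY === 'Windows';
}

function isMac(): bool
{
    return PHP_OS_FAMILY === 'Darwin';
}

function isLinux(): bool
{
    return PHP_OS_FAMILY === 'Linux';
}

// getenv() returns false when the variable isn't set at all.
function isEnvVarSet(string $name): bool
{
    return getenv($name) !== false;
}

// GitHub Actions sets GITHUB_ACTIONS to "true" in workflow runs.
function isGitHubActions(): bool
{
    return getenv('GITHUB_ACTIONS') === 'true';
}

// "8" or "8.1" acts as a minimum: e.g. 8.1.x compares as >= "8.1".
function phpVersionAtLeast(string $version): bool
{
    return version_compare(PHP_VERSION, $version, '>=');
}

printf(
    "OS family: %s, on GitHub Actions: %s, PHP >= 8.1: %s\n",
    PHP_OS_FAMILY,
    isGitHubActions() ? 'yes' : 'no',
    phpVersionAtLeast('8.1') ? 'yes' : 'no',
);
```

Any of these booleans could back a helper in Pest.php written the same way as skipIfConfigKeyNotSet, calling test()->markTestSkipped() when the condition isn't met.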

In closing

I hope you enjoyed learning about this small test optimization. Hat tip to the amazing Luke Downing, who pointed out to me that you can tack any function onto a test.

If you want to know more about testing with Pest, check out our premium video course Testing Laravel, recently updated for Pest v2. Happy testing!